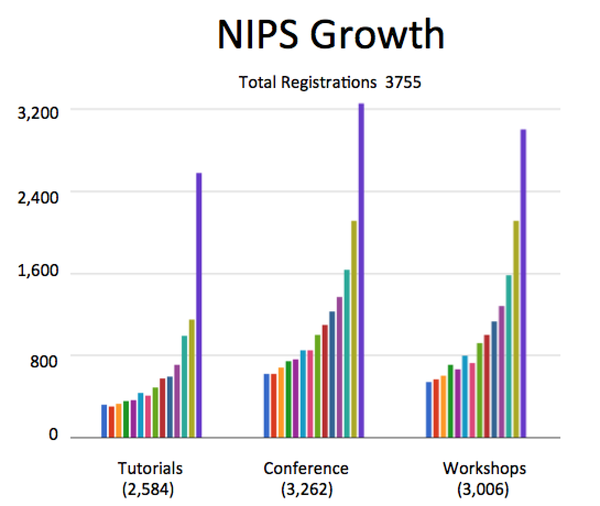

I attended NIPS two weeks ago; it took place in Montréal from the 7th to the 12th of December. It was huge: according to the chairs, the number of attendees rose by 40% in one year, reaching about 3,500 people. Yet it did not feel crowded, thanks to the very effective organization of Montréal's Palais des congrès.

Deep learning was the main topic of this conference: although only 10% of the papers were primarily concerned with deep learning, many optimization and Bayesian optimization papers were related to deep learning in one way or another. As Zoubin Ghahramani pointed out, the field is in a situation analogous to the one that prevailed 25 years ago, in the early 90s: neural networks were flourishing, a lot of effort was devoted to training them, and SVMs were only just being invented. Since then, we have gained a better understanding of what statistical learning means. Rather than searching for the best architecture for each task, I think it's high time we understood what "deep" learning actually means before going any further in this direction.

Some interesting papers

Here are some papers that I found interesting at this conference, mainly revolving around deep learning. I have tried to group them into categories, although some of them could belong to several:

Phase retrieval

Solving Random Quadratic Systems of Equations is Nearly as Easy as Solving Linear Systems

Efficient Compressive Phase Retrieval with Constrained Sensing Vectors

Attention models, increasingly endowed with memory

Attention-Based Models for Speech Recognition

Inferring Algorithmic Patterns with Stack-Augmented Recurrent Nets

Deep generative models

Learning Wake-Sleep Recurrent Attention Models

A Recurrent Latent Variable Model for Sequential Data

Deep Generative Image Models using a Laplacian Pyramid of Adversarial Networks

Generative Image Modeling using Spatial LSTMs

Training Restricted Boltzmann Machine via the Thouless-Anderson-Palmer Free Energy

Optimization for Neural Networks

Beyond Convexity: Stochastic Quasi-Convex Optimization

Learning both Weights and Connections for Efficient Neural Network

Probabilistic Line Searches for Stochastic Optimization

Towards increasingly complex networks (gates, recurrent connections, intermediate modules)

Parallel Multi-Dimensional LSTM, With Application to Fast Biomedical Volumetric Image Segmentation

Convolutional Neural Networks with Intra-Layer Recurrent Connections for Scene Labeling

Group invariance

Learning with Group Invariant Features: A Kernel Perspective

The future of AI

It appears that AI is once again a buzzword. In some respects, it really does feel like we're in a science-fiction movie. This topic was discussed in particular at the symposium "Brains, Minds and Machines", chaired by Tomaso Poggio.

Overall, discussions at the conference focused mainly on algorithmic issues. AI is seen as a superstructure, independent of the physical substrate on which it is built. I don't know whether that is true; however, I think that NIPS (Neural Information Processing Systems) was partly founded in order to build machines inspired by biology, so-called "neuromorphic computing". In this regard, the paper by IBM researchers on how to train their TrueNorth chip was particularly relevant and interesting. For embedded technology, the future probably lies in this direction.

When it comes to higher-order cognitive capabilities, Joshua Tenenbaum presented recent work done in collaboration with deep learning researchers, which was quite impressive. They developed a hierarchical generative model aimed at one-shot learning: when presented with a single instance of an unfamiliar character, the computer is able to generate new examples of this character and to recognize other instances of it, with performance comparable to that of humans. Tenenbaum emphasized the upcoming convergence of cognitive reasoning and deep-learning-based perception, and I think he might be right.

At the end of NIPS, the OpenAI initiative was announced. Funded by private donors (notably Elon Musk and Peter Thiel), it will be an open research lab focused on AI technology. I think it's a very nice initiative, and another indicator of how hot the field is (the pledges total around $1B). Let's see what comes of it!